Section 2.5 Finite Differences Method: Elliptic PDEs

The most elementary Elliptic PDE,

\begin{equation*}

-\Delta u = \rho\;\;\hbox{ in }S\,,\;\;u|_{\partial S}=f,

\end{equation*}

is named Poisson Equation or, when \(\rho=0\text{,}\) Laplace Equation. More explcitly, the PDE above writes as

\begin{equation*}

-u_{xx} -u_{yy} = \rho\;\;\hbox{ in }S\,,\;\;u|_{\partial S}=f,

\end{equation*}

Elliptic PDEs arise in many different context in all sciences. As a concrete example, we illustrate below in some detail the case of electrostatic.

Example: electrostatic electric potential. Maxwell's laws in the empty space

\begin{gather*}

{\mathbf\nabla}\cdot\mathbf E = \frac{\rho}{\varepsilon_0}

\,,\;\;\\

{\mathbf\nabla}\times\mathbf E = -\frac{\partial\mathbf B}{\partial t}\,,\\

{\mathbf\nabla}\cdot\mathbf B = 0

\,,\;\;\\

{\mathbf\nabla}\times\mathbf B = \mu_0(\mathbf J+\varepsilon_0\frac{\partial\mathbf E}{\partial t})

\,,\;\;

\end{gather*}

describe completely any electrodynamical phenomenon where \(\mathbf E\) is the Electric field, \(\mathbf B\) the Magnetic field, \(\rho\) the density of charges and \(\mathbf J\) the density of currents. The constants \(\varepsilon,\mu\) are physical constants related respectively to electricity and magnetism. In case of static fields, the two first Maxwell's laws reduce to

\begin{equation*}

{\mathbf\nabla}\cdot\mathbf E = \frac{\rho}{\varepsilon}

\,,\;\;

{\mathbf\nabla}\times\mathbf E = \,,

\end{equation*}

from which we find that

\begin{equation*}

\mathbf E=-{\mathbf\nabla} V

\,,\;\;

-\Delta V=\frac{\rho}{\varepsilon}\,,

\end{equation*}

where \(V\) is the electric potential function associated to the (conservative) electric vector field \(\mathbf E\text{.}\) Hence, the electric static potential satisfies a Poisson equation where at the right-hand-side we have the density of charges.

Subsection 2.5.1 CSCY

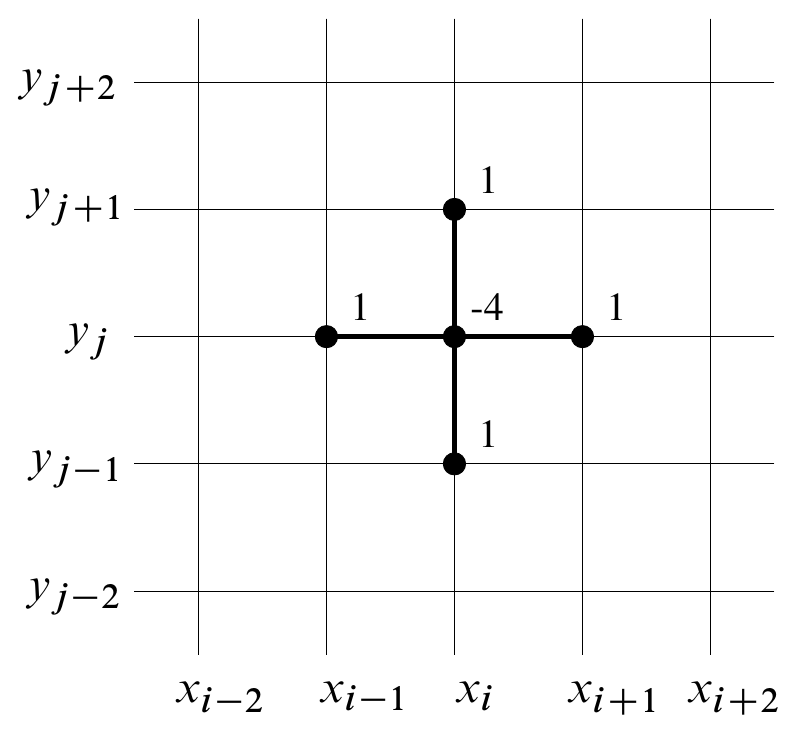

In this Chapter we discuss only one numerical method, which we call CXCY because we use central differences for the second derivatives in both \(x\) and \(y\text{.}\) As usual, we replace the pts of \(S\) with the lattice \(\{i\Delta x,j\Delta y\}, 1\leq i,j\leq N\text{,}\) and we use the approximations

\begin{equation*}

u_{xx}(x_i,y_j)\to\frac{u_{i+1,j}-2u_{i,j}+u_{i-1,j}}{\left(\Delta x\right)^2}\text{,}

\end{equation*}

\begin{equation*}

u_{yy}(x_i,y_j)\to\frac{u_{i,j+1}-2u_{i,j}+u_{i,j-1}}{\left(\Delta y\right)^2}\text{.}

\end{equation*}

Then the Poisson PDE \(-\Delta u=f\) translates into

\begin{equation*}

\frac{u_{i+1,j}-2u_{i,j}+u_{i-1,j}}{\left(\Delta x\right)^2}

+

\frac{u_{i,j+1}-2u_{i,j}+u_{i,j-1}}{\left(\Delta y\right)^2}

=

f_{i,j}

\;,\;\;

1\leq i,j\leq N,

\end{equation*}

where we set \(f_{ij} = f(x_i,y_j)\text{.}\) We set \(\Delta x=\Delta y=h\text{,}\) so we get the following system:

\begin{equation*}

u_{i+1,j}+u_{i,j+1}-4u_{i,j}+u_{i-1,j}+u_{i,j-1}= h^2f_{i,j}.

\end{equation*}

This Finite Differences scheme can be summarized by the 5-points stencil diagram below:

\begin{equation*}

M{\mathbf v}=\mathbf F,

\end{equation*}

where \(\mathbf F\) is the vector of the \(h^2f_{i,j}\) in lexicografic order and

\begin{equation*}

M = \begin{pmatrix}

B&-I&&&&&0\\

-I& B&-I&&&&\\

&.&.&.&&&\\

&&.&.&.&&\\

&&&.&.&.&\\

&&&&-I&B&-I\\

0&&&&&-I&B\\

\end{pmatrix}

\end{equation*}

is a \(N^2\) block matrix, where \(I\) is the \(N\times N\) identity matrix and

\begin{equation*}

B = \begin{pmatrix}

4&-1&&&&&0\\

-1& 4&-1&&&&\\

&.&.&.&&&\\

&&.&.&.&&\\

&&&.&.&.&\\

&&&&-1&4&-1\\

0&&&&&-1&4\\

\end{pmatrix}.

\end{equation*}

Local Truncation Error. Let \(u(x,y)\) be the exact solution of the Poisson equation \(\Delta u=f\) and denote by \(\mathbf U\) the vector of all \(u(x_i,y_j)\) in lexicographic order. The vector \(\;\;\mathbf\tau = M\mathbf U-\mathbf F\;\;\text{,}\) whose components are

\begin{equation*}

\tau_{i,j}=\frac{u(x_{i-1},y_j)+u(x_{i+1},y_j)+u(x_{i},y_{j-1})+u(x_{i},y_{j+1})-4u(x_i,y_j)}{h^2}-f(x_i,y_j)\,,

\end{equation*}

represents the local truncation error for the 5-points stencil method. Via Taylor expansion we see that

\begin{equation*}

\tau_{i,j}=\frac{h^2}{12}\left[u_{xxxx}(x_i,y_j)+u_{yyyy}(x_i,y_j)\right]+O(h^4)

\end{equation*}

Notice that, in particular, this shows that the CXCY method is consistent. Hence its convergence is equivalent to its stability.

Global Error. Let \(\mathbf u = \hat u_{i,j}\) be the solution of the system \(M\mathbf u=\mathbf F\text{.}\) Then

\begin{equation*}

\mathbf E= \mathbf U-\mathbf u

\end{equation*}

is the global error of the method. This vector satisfies the equation

\begin{equation*}

M\mathbf E = M\mathbf U-M\mathbf u = \mathbf\tau +\mathbf F - \mathbf F = \mathbf\tau\,,

\end{equation*}

namely

\begin{equation*}

\mathbf E = M^{-1}\mathbf\tau\,.

\end{equation*}

Stability and Accuracy. Recall that the numerical solution of the Poisson equation through this method is

\begin{equation*}

\mathbf u = M^{-1}\mathbf F

\end{equation*}

and that \(\mathbf R\) is determined by the boundary conditions. In particular, the method is stable if the norm of the inverse stays bounded for \(h\to0\text{.}\) As a byproduct, if a method is stable then the global error is of the same order as the local one. Remark: all norms are equivalent in finite dimension, so this fact can be verified in the most convenient one. In this case it is convenient using the spectral norm. Since \(M\) is symmetric, its spectral norm is just its largest eigenvalue in modulus and the norm of \(M^{-1}\) is the inverse of the smallest eigenvalue in modulus of \(M\text{.}\) Hence it is enough to show that the spectrum of \(M\) stays far from the origin as \(h\to0\text{.}\) A direct calculation shows that the eigenvectors of \(M\) are the

\begin{equation*}

u_{i,j}^{p.q} = \sin(p\pi i h)\sin(q\pi jh),\;\;\;\;p,q=1,\dots,N

\end{equation*}

with eigenvalues

\begin{equation*}

\lambda_{p,q} = \frac{2}{h^2}\left[\cos(p\pi h)-1+\cos(q\pi h)-1\right].

\end{equation*}

The smallest in modulus of these eigenvalues is

\begin{equation*}

\lambda_{1,1} = \frac{2}{h^2}\left[\cos(\pi h)-1+\cos(\pi h)-1\right] \simeq -2\pi^2 + O(h^2),

\end{equation*}

so that

\begin{equation*}

\|M^{-1}\|_{sp} = \frac{1}{-2\pi^2 + O(h^2)} = -\frac{1}{2\pi^2} + O(h^2),

\end{equation*}

namely \(M^{-1}\) is bounded in some nbhd of \(h=0\text{.}\) This means that the CXCY method is stable, and therefore necessarily convergent since we already observed that it is consistent.

Evaluating in concrete a numerical solution. We showed above that the 5-points stencil method has local and global order \(4\) in \(\Delta x=\Delta y=h\text{.}\) Finding the actual numerical solution to the Poisson equation \(\Delta u=f\) amounts to solving the linear system

\begin{equation*}

M\mathbf u=\mathbf F\,.

\end{equation*}

At this point, the PDE problem has become a linear algebra numerical problem. Methods to solve numerically linear systems can be found in any numerical anaysis introductory textbook. Below we briefly discuss a few of these methods.

The Jacobi method. Given a linear system

\begin{equation*}

Mv=F

\end{equation*}

where \(v=(x,y,z,\dots)\text{,}\) solve the first equation with respect to \(x\text{,}\) the second with respect to \(y\text{,}\) the third with respect to \(z\) and so on. Now, put sub-indices \(k+1\) to the variables at the left-hand-side and \(k\) to the right-hand-side. The system so obtained defines a recursive iterative process that, under suitable conditions, converges to the solution of the system above for all initial points. In particular, it converges for all diagonally dominant matrices \(M\text{,}\) which happens indeed in this case.

Example. Consider the \(2\times2\) system

\begin{equation*}

\left\{

\begin{aligned}

3x+y=2\\

2x-4y=3\\

\end{aligned}

\right..

\end{equation*}

After the procedure above, we get

\begin{equation*}

\left\{

\begin{aligned}

x_{k+1}=(2-y_k)/3\\

y_{k+1}=(2x_k-3)/4\\

\end{aligned}

\right..

\end{equation*}

Since the matrix \(\begin{pmatrix}3&1\\ 2&-4\\\end{pmatrix}\) is diagonally dominant, this recursive procedure converges to the solution of the system for any initial point, as one can verify with the code below:

Application 1: Electric Potential of a point-like charge. Below we explore numerically the potential generated by a point charge \(q=4\pi\varepsilon\text{,}\) namely

\begin{equation*}

- \Delta V(x,y) = 4\pi\delta(x,y)

\end{equation*}

for all \((x,y)\) in \(S=[-1,1]\times[-1,1]\text{.}\) Usually the potential \(V\) is set to zero at infinity and the solution is the well known potential of a charged point-like particle:

\begin{equation*}

V = \frac{1}{r}.

\end{equation*}

Below we will simply set \(V=0\) on the boundary of \(S\text{.}\)

Application 2: a polynomial case. In the next implementation, we use the Jacobi method to solve an artificial but much simpler elliptic PDE:

\begin{equation*}

-u_{xx}-u_{yy} = 32(x(1-x)+y(1-y)), u_{\partial S}=0

\end{equation*}

whose unique solution is given by

\begin{equation*}

u(x,y) = 16xy(1-x)(1-y).

\end{equation*}

The Gauss-Seidel method. The Gauss-Seidel method works almost exactly as the Jacobi one, except that, when evaluating the \(i\)-th variable, we use the new values of all variables updated to that point. The GS method converges when \(M\) is symmetric or diagonally dominant. For tridiagonal matrices, it is equivalent to Jacobi.

Example. Consider the \(2\times2\) system

\begin{equation*}

\left\{

\begin{aligned}

3x+y=2\\

2x-4y=3\\

\end{aligned}

\right..

\end{equation*}

After the procedure above, we get

\begin{equation*}

\left\{

\begin{aligned}

x_{k+1}=(2-y_k)/3\\

y_{k+1}=(2x_{k+1}-3)/4\\

\end{aligned}

\right..

\end{equation*}

Since the matrix \(\begin{pmatrix}3&1\\ 2&-4\\\end{pmatrix}\) is diagonally dominant, this recursive procedure converges to the solution of the system for any initial point, as one can verify with the code below:

Application 1: Electric Potential of a point-like charge. Below we use the Gauss-Seidel method to solve the PDE

\begin{equation*}

-\Delta V=4\pi\delta(x,y)

\end{equation*}

on the square \(S=[-1,1]^2\) with boundary conditions \(u|_{\partial S}=0\text{.}\) The only difference with the Jacobi version is line 35.

Application 2: a polynomial case. Below we use Gauss-Seidel to solve

\begin{equation*}

-u_{xx}-u_{yy} = 32(x(1-x)+y(1-y)), u_{\partial S}=0

\end{equation*}

The only difference with the Jacobi version is line 40.

Gauss-Seidel with SOR relaxation. Write \(M=L+D+U\text{,}\) with \(D\) diagonal, \(U\) upper and \(L\) lower diagonal. The solution is obtained iteratively by

\begin{equation*}

{\mathbf v}_{k+1} = -(D+\omega L)^{-1}((1-\omega)D-\omega U){\mathbf v}_{k} + (D+\omega L)^{-1}\mathbf R

\end{equation*}

where \(0 < \omega < 2\)(it is sufficient for the convergence that \(A\) is symmetric and positive-definite). In general, the optimal \(\omega\) depends on the boundary conditions.