Section 2.6 Finite Elements Method

Consider a finite dimensional vector space \(V\) with a scalar product \(\langle,\rangle\text{,}\) a linear operator \(A:V\to V\) and a vector \(r\in V\text{.}\) We want to solve the equation

\begin{equation*}

A v = r\,.

\end{equation*}

Recall that every finite dimensional vector space admits bases \(\{e_1,\dots,e_n\}\), \(n=\dim V\text{,}\) so that every \(v\in V\) can be written in a unique way as

\begin{equation*}

v=\sum_{i=1}^n v_i e_i\,.

\end{equation*}

An equivalent formulation. Clearly a vector that is orthogonal to all \(e_i\) must be zero (prove it!), so \(Av=r\) is equivalent to the equation

\begin{equation*}

\langle A v,e_i\rangle = \langle r,e_i\rangle\,,\hbox{ for all }1\leq i\leq n.

\end{equation*}

In turn, this is the same as asking

\begin{equation*}

\langle A v,s\rangle = \langle r,s\rangle\, \hbox{ for all }s\in V.

\end{equation*}

While in finite dimension these two formulations are equivalent, in infinite dimension the latter is weaker, namely it has in principle more solutions than the original one.

Example 1 - ODEs. Consider the space \(V=C^\infty[0,1]\) of all functions \(u:[0,1]\to\Bbb R\) that are continuous on the whole \(D=[0,1]\) together with their derivatives of any order. \(V\) is a vector space since, if \(u,v\in V\text{,}\) then also any linear combination \(\alpha u+\beta v\in V\) for every \(\alpha,\beta\in\Bbb R\text{.}\) The dimension of this space, though is infinite! For example

\begin{equation*}

s_n(x) = \sin(2\pi n x)\,,\;c_n(x)=\cos(2\pi n x)\,,\; n=1,2,3,\dots

\end{equation*}

is a set of infinitely many linearly independent elements of \(V\text{.}\) In fact, every function in \(V\) can be developed in Fourier series:

\begin{equation*}

u=\sum_{n=1}^\infty \left(u_{s,n} s_n+u_{c,n} c_n\right).

\end{equation*}

Moreover, on \(V\) we can define the scalar product

\begin{equation*}

\langle f,g \rangle = \displaystyle\int_0^1\!\!\!f(x)g(x)dx\,.

\end{equation*}

The space \(V\) with the scalar product \(\langle,\rangle\) is a pre-Hilbert space, namely \(V\) is like an Euclidean space but it is not complete (just like \(\Bbb Q^n\) is not complete with respect to the Euclidean distance) because Cauchy sequences \(\{u_n\}\) of \(V\) might not converge to elements of \(V\) (Problem: find a Cauchy seq. of continuous functions converging, w/resp to \(\langle , \rangle\text{,}\) to a function with a finite gap at some point) The completion of \(V\) with respect to \(\langle,\rangle\) is called \(L^2[0,1]\) and is the space of all measurable functions \(u\) such that

\begin{equation*}

\|u\|^2=\int_0^1|u(x)|^2 dx<\infty\,.

\end{equation*}

Consider now the following equation in \(V\text{:}\)

\begin{equation*}

-u''(x)+r(x)u(x)=f(x)\,,\;x\in[0,1]\,,\;\;u(0)=u(1)=0\,,

\end{equation*}

with \(f,r\in V\text{.}\) This is a linear equation in \(V\) and can be written as

\begin{equation*}

Au=f\,,\;u\in C^\infty_0[0,1]

\end{equation*}

where \(A\) is the linear operator from \(V\) to itself

\begin{equation*}

-\frac{d^2}{dx^2}+r(x)

\end{equation*}

and \(C^\infty_0[0,1]\) is the subset of smooth functions that are zero at \(x=0,1\text{.}\) We need a few steps to get to the weak form of this linear equation. First we take the scalar product with a \(s\in C^\infty_0[0,1]\text{,}\) so we get

\begin{equation*}

-\langle u'',s\rangle + \langle r\cdot u, s\rangle = \langle f,s\rangle\,.

\end{equation*}

Then we integrate by parts the first term:

\begin{equation*}

\langle u'',s\rangle = \displaystyle\int_0^1\!\!\!u''(x)s(x)dx = u'(x)s(x)\bigg|^1_0 -

\displaystyle\int_0^1\!\!\!u'(x)s'(x)dx\,,

\end{equation*}

The equation now is

\begin{equation*}

\langle u',s'\rangle + \langle r\cdot u, s\rangle = \langle f,s\rangle\,,\;\hbox{ for all }s\in C^\infty_0[0,1].

\end{equation*}

In this equation all functions appear under integral sign, so there is no actual need to require so much regularity: it is enough to ask that both \(u\) and \(s\) belong to \(H^1_0[0,1]\text{,}\) the space of square-integrable functions whose first derivative is also square-integrable and that converge to \(0\) for \(x\to0^+,1^-\text{.}\) Subsection 2.6.1 Approximating weak solutions

If \(\{\phi_i\}\) is a basis for the subspace \(H^1_0[0,1]\) of all functions of \(L^2[0,1]\) with square-integrble gradient and which tend zero for \(x\to0^+\) and \(x\to1^-\text{,}\) then

\begin{equation*}

\langle u',\phi'_i\rangle+r\langle u,\phi_i\rangle=\langle f,\phi_i\rangle

\end{equation*}

for all \(i\text{.}\) Since \(u=\sum_{i=1}^\infty u_i\phi_i\) and \(f=\sum_{i=1}^\infty f_i\phi_i\) we have that

\begin{equation*}

\sum_{j=1}^\infty u_j\left( \langle \phi'_j,\phi'_i\rangle + r\langle \phi_j,\phi_i\rangle\right)

=

\sum_{j=1}^\infty f_j\langle \phi_j,\phi_i\rangle.

\end{equation*}

By truncating this sum to some \(N\text{,}\) we get that

\begin{equation*}

AU=F

\end{equation*}

form some matrix \(A\) and \(F\text{.}\)

Example 2 - PDEs. Consider the space \(V=C^\infty(S)\text{,}\) where \(S\) is the unit square \([0,1]\times[0,1]\text{,}\) of all functions \(u:S\to\Bbb R\) that are continuous on the whole square together with their derivatives of any order. Again, \(V\) is an infinite dimensional vector space with scalar product

\begin{equation*}

\langle f,g \rangle = \displaystyle\iint_S\!\!\!f(x,y)g(x,y)dxdy\,.

\end{equation*}

Consider now the following equation in \(V\text{:}\)

\begin{equation*}

-u_{xx}(x,y)-u_{yy}(x,y)=f(x,y)\,,\;x\in[0,1]\,,\;\;u=0 on \partial S\,,

\end{equation*}

where \(f\in V\) and \(\partial S\) is the boundary of \(S\text{.}\) This is a linear equation in \(V\) and can be written as

\begin{equation*}

Au=f\,,\;u\in C^\infty_0(S)

\end{equation*}

where \(A\) is the linear operator from \(V\) to itself

\begin{equation*}

-(\partial_{xx}+\partial_{yy})

\end{equation*}

and \(C^\infty_0(S)\) is the subset of smooth functions that are zero at the boundary of \(S\text{.}\) As above, we take the scalar product with \(s\in C^\infty_0(S)\text{,}\) so we get

\begin{equation*}

-\langle u_{xx},s\rangle -\langle u_{yy},s\rangle = \langle f,s\rangle\,.

\end{equation*}

Then we integrate by parts the lhs terms as above:

\begin{equation*}

\langle u_{xx},s\rangle = -\langle u_x,s_x\rangle\,,\;\langle u_{yy},s\rangle = -\langle u_y,s_y\rangle

\end{equation*}

The equation now is

\begin{equation*}

\langle\nabla u,\nabla s\rangle = \langle f,s\rangle\,,\;\hbox{ for all }s\in C^\infty_0(S)

\end{equation*}

Again, in this equation all functions appear under integral sign so there is no actual need to require so much regularity: it is enough to ask that both \(u\) and \(s\) belong to \(H^1_0(S)\text{,}\) the space of \(L^2\) functions on \(D\) whose gradient is also \(L^2\) and that converge to 0 on any sequence of points converging to the boundary. If \(\{\phi_i\}\) is a basis for \(H^1_0(S)\text{,}\) then

\begin{equation*}

\langle \nabla u,\nabla \phi_i\rangle=\langle f,\phi_i\rangle

\end{equation*}

for all \(i\text{.}\) Since \(u=\sum_{i=1}^\infty u_i\phi_i\) and \(f=\sum_{i=1}^\infty f_i\phi_i\) we have that

\begin{equation*}

\sum_{j=1}^\infty u_j \langle \nabla \phi_j,\nabla\phi_i\rangle = \sum_{j=1}^\infty f_j\langle\phi_j,\phi_i\rangle

\end{equation*}

By truncating this sum to some \(N\text{,}\) we get that

\begin{equation*}

AU=F

\end{equation*}

form some matrix \(A\) and \(F\text{.}\)Subsection 2.6.2 Implementation of the Finite Elements Method

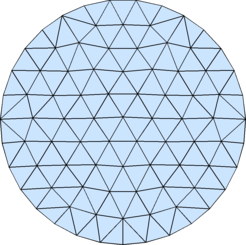

The main goal of the Finite Elements Method is having a method able to work on a domain \(D\) no matter how complicated is its shape, bypassing the Finite Difference limitation to rectangular grids. This is accomplished mainly with the following two points:-

Generating a suitable mesh (possibly tailored on the specifics of the PDE) of \(D\) with \(N\) nodes \(\{p_1,\dots,p_N\}\) and a corresponding set of functions \(\{\phi_1,\dots,\phi_N\}\) such that:

- \(\phi_i(p_j)=\delta_{i,j}\text{;}\)

- \(\phi_i(p_i)=0\) on every mesh polygon not containing \(p_i\text{.}\)

- \(\phi_i\) has an absolute max at \(p_i\) and no other local max or saddle point on the polygons on which is not zero.

Using the PDE's weak version and restricting the eq. to \(\hbox{span}\,\{\phi_1,\dots,\phi_N\}\subset H^1(D)\text{.}\)

- The union \(M_D\) of all \(P_i\)'s is an approximation of \(D\text{,}\) namely the measure of the set of points not in \(M_D\cap D\) is small with respect to the measure of \(D\text{.}\)

- For any \(i\neq j\text{,}\) \(P_i\) and \(P_j\) are either disjoint or share a whole side; in particular, no vertex of one polygon falls in the interior of the side of another one.

m = 10; n = 10; [x,y] = ndgrid((0:m-1)/(m-1),(0:n-1)/(n-1)); pts = [x(:),y(:)]; tri = [1,2,m+2; 1,m+2,m+1]; tri = kron(tri,ones(m-1,1))+kron(ones(size(tri)),(0:m-2)'); tri = kron(tri,ones(n-1,1))+kron(ones(size(tri)),(0:n-2)'*m); trimesh(t,pts(:,1),pts(:,2),zeros(N,1))A great starting point for generating 2D and 3D meshes with any sort off shape is Persson's Distmesh package. Functions Bases. We consider just the simplest type of mesh, where all polygons in a it are triangles. In this type of mesh, a reasonable set of \(\phi_i\) is the set of piece-wise linear functions that, on any triangle \(T\) of the mesh, are either identically zero or linear. Let \((x_i,y_i)\text{,}\) \(i=1,2,3\) be the vertices of \(T\) and \(\psi_i=a_i+b_ix+c_iy\text{,}\) \(i=1,2,3\text{,}\) the restriction to \(T\) of the three basis functions that are not identically zero on \(T\) and such that \(\psi_i(x_j,y_j)=\delta_{ij}\text{.}\) Then let

\begin{equation*}

M_T=

\begin{pmatrix}

1&x_1&y_1\cr

1&x_2&y_2\cr

1&x_3&y_3\cr

\end{pmatrix}\text{.}

\end{equation*}

The coefficients are solutions of

\begin{equation*}

M_T

\begin{pmatrix}

a_1\cr

b_1\cr

c_1\cr

\end{pmatrix}

=

\begin{pmatrix}

1\cr

0\cr

0\cr

\end{pmatrix}

,

M_T

\begin{pmatrix}

a_2\cr

b_2\cr

c_2\cr

\end{pmatrix}

=

\begin{pmatrix}

0\cr

1\cr

0\cr

\end{pmatrix}

,

M_T

\begin{pmatrix}

a_3\cr

b_3\cr

c_3\cr

\end{pmatrix}

=

\begin{pmatrix}

0\cr

0\cr

1\cr

\end{pmatrix}

,

\end{equation*}

namely

\begin{equation*}

\begin{pmatrix}

a_1&a_2&a_3\cr

b_1&b_2&b_3\cr

c_1&c_2&c_3\cr

\end{pmatrix}

=

M^{-1}_T

\end{equation*}

Implementation in MATLAB