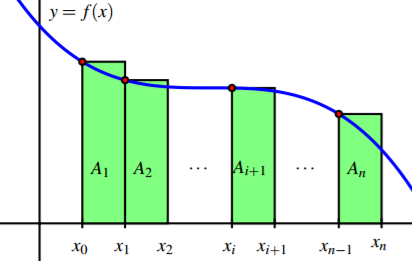

Section 8.1 Riemann sums

The Fundamental Theorem of Calculus tells us that

\begin{equation*}

\int_a^bf(x)dx=F(b)-F(a)

\end{equation*}

for any antiderivative \(F(x)\) of \(f(x)\text{.}\) Unfortunately, though, very few functions have an antiderivative that can be written in terms of elementary functions. For instance, \(f(x)=e^{-x^2}\) does not! The idea of numerical integration is to devise algorithms able to evaluate directly the integral as the area under the graph.

A simple example: Riemann sums. Every student already met such a method in Calculus 1: Riemann sums! For every continuous function (and many others), the Riemann integral can be defined as the limit of the left Riemann sum:

\begin{equation*}

\int_a^bf(x)dx=\lim_{n\to\infty}\left[f(a)+f(a+h)+\dots+f(a+(n-1)h)\right]h,

\end{equation*}

where \(h=(b-a)/n\text{.}\)

\begin{equation*}

L_n=\left[f(a)+f(a+h)+\dots+f(a+(n-1)h)\right]h.

\end{equation*}

Use the interactive frame below to see a visualization of how and why the left Riemann sum approximation works. The parameters you can change are: the number \(n\) of subdivisions of the integration interval; the function \(f\) to be integrated; the integration domain \([a,b]\text{.}\)

Below is a simple Python implementation of this algorithm, used to evaluate the integral of \(\sin x\) from 0 to 2.

Riemann sums error. How can we estimate the error we are making as function of \(n\text{?}\) Within each interval \([a+kh,a+(k+1)h]\text{,}\) the worst that can happen is that \(f\) changes with constant rate of change equal to the maximum value of the derivative in that interval. In fact, to keep formulae simpler, we take the max of \(f'(x)\) over the whole interval \([a,b]\text{.}\) We assume that \(f(x)\) has continuous first derivative, so that we are granted that \(M=\max_{[a,b]}|f'(x)|\) exists. Hence, the largest difference between the value of \(f(x)\) at \(a+kh\) and the one at \(a+(k+1)h\) is \(Mh\text{.}\) In turn, since the length of \([a+kh,a+(k+1)h]\) is \(h\text{,}\) the highest possible error in the estimate of the integral in that interval is the area \(\frac{1}{2}Mh^2\) of the triangle with base \(h\) and height \(Mh\text{.}\) Since we have \(n=\frac{b-a}{h}\) summands, a bound to the total error is

\begin{equation*}

\left|\int_a^bf(x)dx-L_n\right|\leq\frac{b-a}{2}h\max_{[a,b]}|f'(x)|.

\end{equation*}

Convergence Speed. The formula above shows that the truncation error of the Riemann sum is \(O(h^1)\text{.}\) Below we visualize this scaling law by evaluating the error in the computation of \(\int_0^2\sin(x)dx\text{.}\) In this case, due to the slow convergence of this method, it is not possible to set \(h\) smaller than \(10^{-8}\) since otherwise the number of summands becomes too large to handle for Python. The minimum error achievable with this method for this integral is hence of the order of \(10^{-8}\text{.}\) Notice also that, in this range for \(h\text{,}\) the round-off component of the error is not visible.